Hello!

My goal is to harness bleeding-edge technology to tackle my community's most pressing challenges.

Current interests: building products and GTM teams to create world-changing businesses by applying foundation models to advance biology, physics, and mental health.

Career:

Head of Product @ Petuum (2022-2024)

Founding Product Manager @ Skeema (acq.) (2021-2022)

Founded Various Flawed Startups (2019-2020)

Tech Consulting @ Strata Decision Technology (2015-2019)

Contributor @ LLM360

MBA @ CMU

Selected Generative AI Projects and PoCs

AI Developer Tools

Applications & Tools

Tools for AI developers, including but not limited to data preparation, resource-adaptive cluster scheduling, deployment, and monitoring.

LLM Application Development

Applications & Tools

Adapting language models for downstream applications via LLM finetuning (e.g. math, logic, and reasoning).

Natural Language Search Systems

Applications & Tools

PoC to replace keyword-based search with natural language search within a university's student intranet. The search system covered unstructured text scraped from decentralized university websites and structured Salesforce objects.

Amber-7B

Foundation Model Pretraining

A 7B English language model with the LLaMA architecture.

K2-65B

Foundation Model Pretraining

The largest fully reproducible large language model, outperforming Llama 2 70B while using 35% less compute.

Crystal-7B

Foundation Model Pretraining

A 7B LLM balancing natural language and coding ability to extend the Llama 2 frontier.

METAGENE-1

Genomics Foundation Model

A 7B-parameter metagenomic foundation model designed for pandemic monitoring.

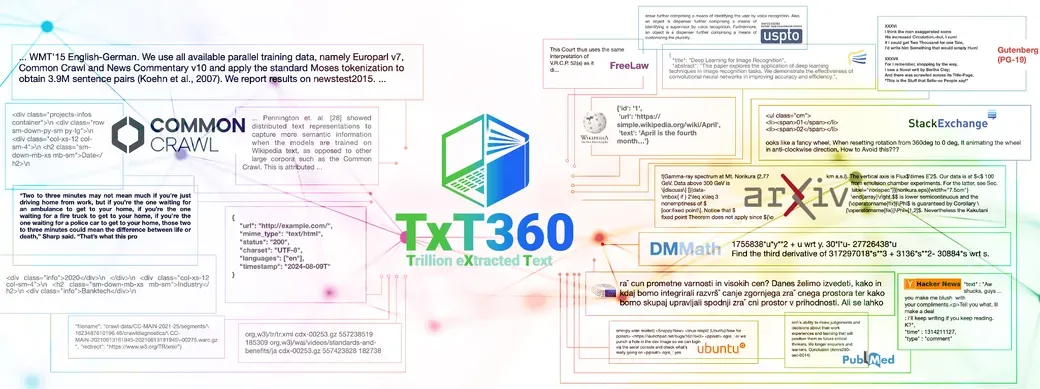

TxT360

Data Preparation

A globally deduplicated 5T token dataset for LLM pretraining.

LLM Training and Inference Benchmarking

LLM Performance and Operations

Benchmarking training and inference across hardware and software stacks to find optimal cluster configurations for the available machines, models, sequence lengths, and batch sizes.

Publications

METAGENE-1: Metagenomic Foundation Model for Pandemic Monitoring

We pretrain METAGENE-1, a 7-billion-parameter autoregressive transformer model, which we refer to as a metagenomic foundation model, on a novel corpus of diverse metagenomic DNA and RNA sequences comprising over 1.5 trillion base pairs.*

*Not an author. Assisted with release and editing.

Towards Best Practices for Open Datasets for LLM Training

Many AI companies are training their large language models (LLMs) on data without the permission of the copyright owners. The permissibility of doing so varies by jurisdiction: in countries like the EU and Japan, this is allowed under certain restrictions, while in the United States, the legal landscape is more ambiguous.

LLM360 K2: Building a 65B 360-Open-Source Large Language Model from Scratch

We detail the training of the LLM360 K2-65B model, scaling up our 360° Open Source approach to the largest and most powerful models under project LLM360.

TxT360: a 5T token globally deduplicated dataset for LLM pretraining

We introduce TxT360 (Trillion eXtracted Text), the first dataset to globally deduplicate 99 CommonCrawl snapshots and 14 high-quality data sources from diverse domains (e.g., FreeLaw, PG-19, etc.).

LLM360: Towards Fully Transparent Open-Source LLMs [COLM '24]

The recent surge in open-source Large Language Models (LLMs), such as LLaMA, Falcon, and Mistral, provides diverse options for AI practitioners and researchers. However, most LLMs have only released partial artifacts, such as the final model weights or inference code, and technical reports increasingly limit their scope to high-level design choices and surface statistics.

Tabs.do: Task-Centric Browser Tab Management [UIST '21]

The nature of people’s online activities has gone through dramatic changes in past decades, yet browser interfaces have stayed largely the same since tabs were introduced nearly 20 years ago. The divide between browser interfaces that only provide affordances for managing individual tabs and users who manage their attention at the task level can lead to a “tab overload” problem. We explored a task-centric tab manager called Tabs.do, which enables users to save their open tabs and manage them with task structures and affordances that better reflect their mental models. To lower the cost of importing, Tabs.do uses a neural network in the browser to make suggestions for grouping users’ open tabs by task.